What's the difference between 3D depth sensing with structured light scanning and LiDAR? What's better for which use case and why should we care? Read on and learn more about 3D depth mapping!

A great example of structured light is Apple's Face ID. A dot projector flashes thousands of infrared dots onto your face, and an infrared camera reads them. Because the projector and camera sit slightly apart, where each dot lands in the camera image depends on how far away the surface it hit is. By comparing the observed pattern with the known projected pattern, the device can build a depth map.

Credit goes to TechInsider for the screenshots
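Structured-light systems commonly turn each dot into a distance by triangulation. The projector and camera are separated by a small, known baseline B, so a dot appears shifted in the camera image by a disparity d (in pixels) that shrinks as the surface moves away. Depth then follows Z = f·B/d, where f is the camera's focal length in pixels. This is a minimal sketch of that general technique, not Face ID's actual pipeline; the function name and the focal length and baseline values are hypothetical.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulate depth (in meters) for one dot: Z = f * B / d.

    focal_length_px: camera focal length, in pixels
    baseline_m: distance between projector and camera, in meters
    disparity_px: how far the dot is shifted in the image, in pixels
    """
    if disparity_px <= 0:
        # Zero shift would mean the surface is infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical sensor parameters, chosen only for illustration.
FOCAL_LENGTH_PX = 600.0
BASELINE_M = 0.02

for shift_px in (24.0, 12.0, 6.0):
    z = depth_from_disparity(FOCAL_LENGTH_PX, BASELINE_M, shift_px)
    print(f"dot shifted {shift_px:4.1f} px -> about {z:.2f} m away")
```

With these made-up numbers, halving the shift doubles the estimated distance (24 px gives 0.5 m, 12 px gives 1.0 m, 6 px gives 2.0 m), so distant surfaces produce only tiny, hard-to-measure shifts.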

This works really well for building very accurate depth maps at close range (roughly 1 to 5 feet), which makes sense for Face ID: the reading of your face needs to be very accurate, and you'll generally hold your phone a couple of feet away from your face.

But as the target object gets farther away, the accuracy of the structured light depth map gets worse and worse. The projected dots spread farther apart and return less light, so it's harder for the device to read and interpret them accurately.
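A triangulation model of structured light hints at why accuracy falls off so quickly. Differentiating Z = f·B/d shows that a fixed error Δd in locating a dot becomes a depth error of roughly ΔZ ≈ Z²·Δd/(f·B), so the error grows with the square of the distance. The sketch below assumes a generic triangulating structured-light sensor; the quarter-pixel matching error and the sensor parameters are hypothetical.

```python
def depth_error_m(depth_m: float, focal_length_px: float, baseline_m: float,
                  disparity_error_px: float) -> float:
    """Approximate depth error caused by a small dot-localization error.

    From Z = f*B/d, |dZ/dd| = Z**2 / (f*B), so dZ ~= Z**2 * dd / (f*B).
    """
    return depth_m ** 2 * disparity_error_px / (focal_length_px * baseline_m)


# Hypothetical parameters, for illustration only.
FOCAL_LENGTH_PX = 600.0
BASELINE_M = 0.02
DISPARITY_ERROR_PX = 0.25  # assumed sub-pixel matching error

for depth in (0.5, 1.0, 3.0):
    err = depth_error_m(depth, FOCAL_LENGTH_PX, BASELINE_M, DISPARITY_ERROR_PX)
    print(f"at {depth:.1f} m: depth error about {err * 100:.1f} cm")
```

Under these assumptions the error is roughly 0.5 cm at 0.5 m, about 2 cm at 1 m, and close to 19 cm at 3 m: doubling the distance quadruples the error.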

+ Excellent short-range performance
+ Produces very high-fidelity depth maps
- Poor longer-range performance: depth map accuracy degrades rapidly the farther away the object is

On the other hand, there's LiDAR. Depending on who you ask, the name stands for LIght Detection And Ranging or Laser Imaging, Detection, And Ranging, or is simply a mash-up of LIght and RADAR. These devices use the Time-of-Flight principle to gather distance data in a scene.

Time-of-Flight uses an emitter to project light (usually infrared) and a receiver to capture the light that reflects back. By measuring the time between emission and the reflection's return (the time of flight), the device can calculate how far the light travelled. Because the light makes a round trip, the distance to the object is half that path.
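The Time-of-Flight calculation itself is short: the measured time covers the trip to the object and back, so the distance is d = c·t/2, where c is the speed of light. Below is a minimal sketch of that conversion with illustrative function names. It assumes the sensor measures the round-trip time directly; some ToF sensors instead infer the time from the phase shift of modulated light.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the meter


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target: the light covers it twice (out and back)."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_from_distance(distance_m: float) -> float:
    """Round-trip time the sensor would have to measure for a given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


for d in (0.01, 0.5, 10.0):
    t_ns = round_trip_from_distance(d) * 1e9
    print(f"{d:5.2f} m -> round trip of {t_ns:.3f} ns")
```

These numbers help explain LiDAR's trade-off: a target 10 m away returns light after only about 67 ns, and telling apart two surfaces 1 cm apart means resolving a difference of about 67 ps, which is part of why ToF sensors favor overall range over fine detail.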

LiDAR devices generally work at longer distances than structured light devices, but at the cost of fine detail. Consumer 3D LiDAR cameras generally perform best at distances of 0.5 to 10 meters, hence their use in environment mapping, AR, and person and object detection, among other things.

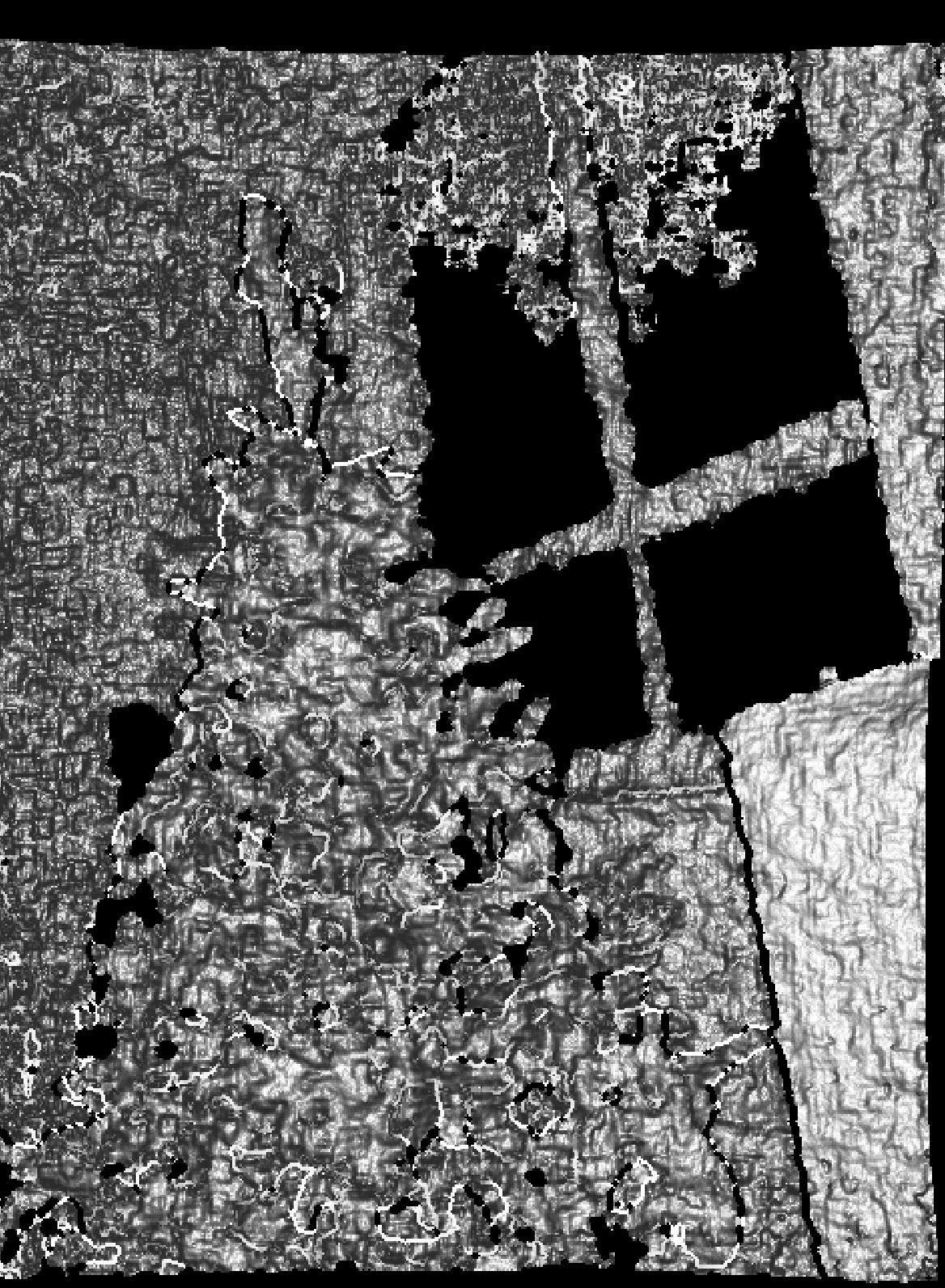

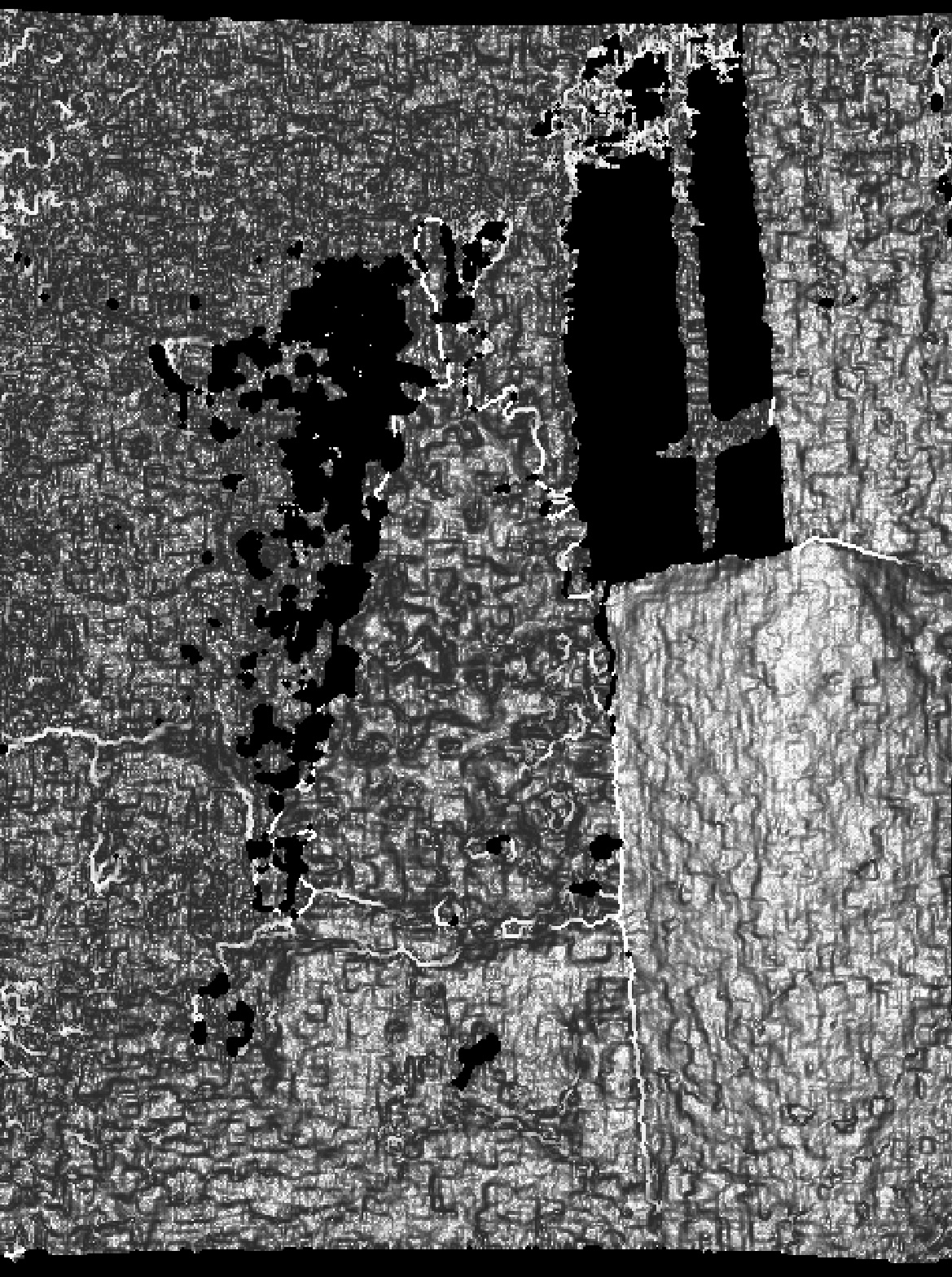

Here's how the same scene looks with the regular camera, the LiDAR camera, and the Face ID camera (structured light) camera on the iPhone 12 Pro:

Notice how easy it is to tell the couch, Christmas tree, and presents apart in the LiDAR image, while the structured light image shows almost nothing recognizable: the scene is well beyond the short range that structured light is built for.

+ Excellent medium-range performance
- Not meant for fine detail; better suited to general distance and scene measurements

And there you have it. Both methods have their advantages and drawbacks. As with anything, it's important to know which tool works best for the job at hand!